Lab Resources

Empower Research and Education with Our Hardware Tools, Software Tools, and Datasets

Hardware Tools

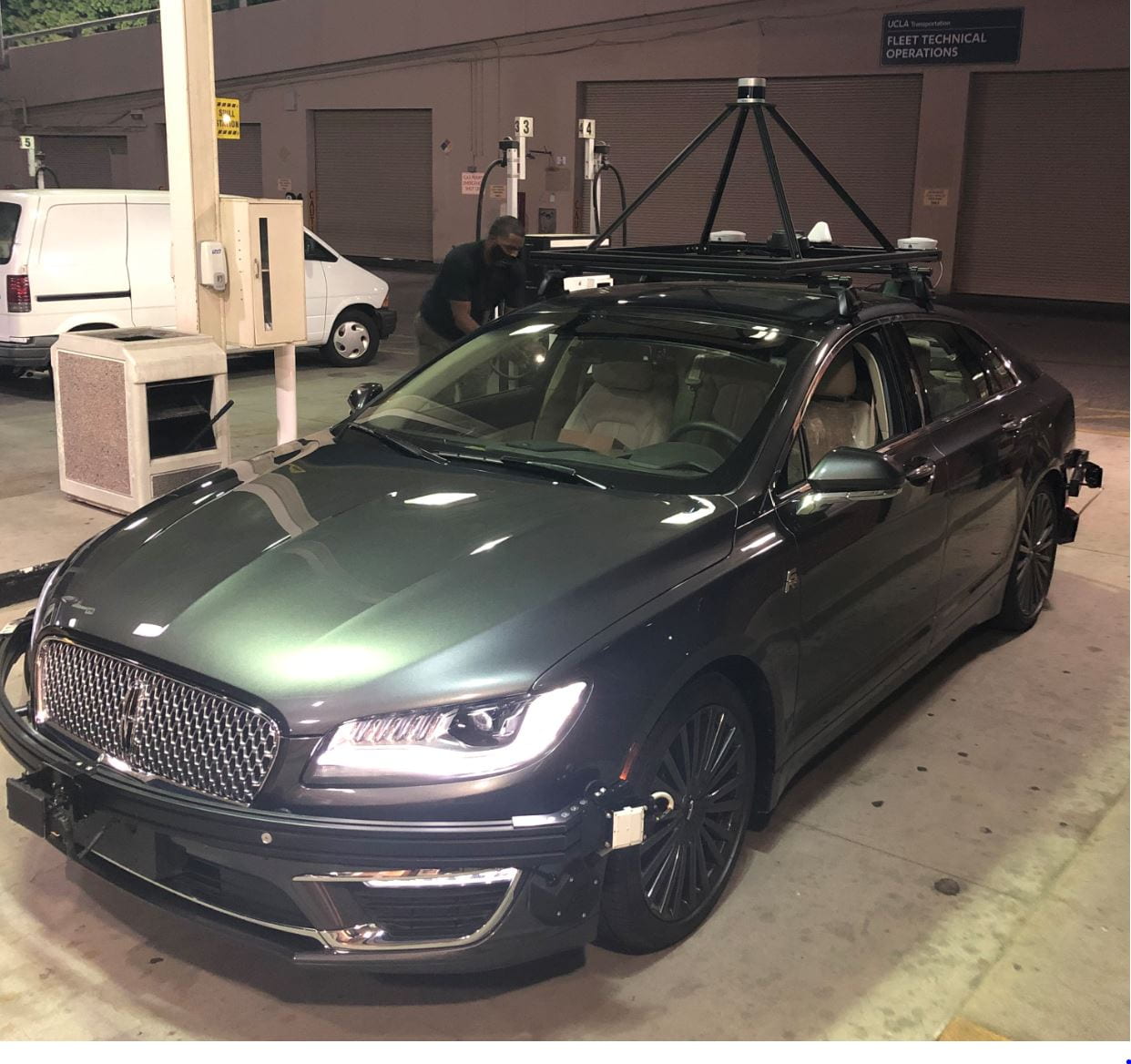

Autonomous Test Vehicles

- Two connected and automated vehicles, each equipped with:

  - X-by-wire systems

  - 128-beam LiDAR

  - ZED 2i stereo cameras on the front, rear, left, and right sides of the vehicle for a 360-degree field of view (FoV)

  - Mako cameras inside the vehicle for driver monitoring

  - Radars

  - GNSS/IMU integrated localization system for precise positioning

- Supports single-vehicle autonomous driving and Cooperative Driving Automation (CDA) research

Smart Intersection

- Safety-oriented smart intersection system developed and integrated at a UCLA campus intersection

- Uses C-V2X, advanced sensors, and deep learning for real-time safety and perception

- Follows a standard autonomous driving pipeline: sensing, perception, decision-making, and actuation

- Employs multi-modal sensor fusion (LiDAR, cameras, radar) for robust, all-weather performance

- Includes health monitoring of edge devices for reliability and fault detection

- Digital twin enables simulation-based training and real-time performance evaluation

- Hardware units include LiDAR, cameras, radar, and GNSS receivers, with GNSS providing precise time synchronization
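
Multi-modal fusion depends on aligning the LiDAR, camera, and radar streams in time. As an illustration only, the sketch below pairs each LiDAR sweep with the nearest camera frame inside a tolerance, assuming all timestamps are already on a common GNSS-disciplined clock and expressed in integer milliseconds. The function name `match_nearest` and the 20 ms tolerance are hypothetical, not part of the intersection's actual software.

```python
from bisect import bisect_left
from typing import List, Optional


def match_nearest(reference_ms: List[int], other_ms: List[int],
                  tolerance_ms: int) -> List[Optional[int]]:
    """For each reference timestamp, return the index of the closest entry in
    other_ms (sorted ascending), or None if no entry lies within tolerance_ms.

    Hypothetical helper: assumes both streams share one clock (e.g. GNSS time).
    """
    matches: List[Optional[int]] = []
    for t in reference_ms:
        i = bisect_left(other_ms, t)
        best, best_gap = None, tolerance_ms
        # The closest timestamp is either just before t or at/after t.
        for j in (i - 1, i):
            if 0 <= j < len(other_ms):
                gap = abs(other_ms[j] - t)
                if gap <= best_gap:
                    best, best_gap = j, gap
        matches.append(best)
    return matches


# 10 Hz LiDAR sweeps vs. irregular camera frames (illustrative numbers)
lidar_ms = [0, 100, 200, 300]
camera_ms = [10, 45, 110, 190, 350]
print(match_nearest(lidar_ms, camera_ms, tolerance_ms=20))  # [0, 2, 3, None]
```

A sweep with no camera frame inside the tolerance (the 300 ms sweep here) is left unmatched rather than paired with a stale frame.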

Driving Simulator

- Driving simulator for studying human-autonomy interaction

- Medium-fidelity setup with OpenCDA, CARLA, physical controls, and a triple-monitor display

- Multi-modal data collection: questionnaires, interviews, and performance metrics

- Evaluates manual-to-automated transitions in critical scenarios

- Captures subjective data (trust, stress, attention) and objective data (reaction time, lane keeping, rule compliance)

- Supports comprehensive driver behavior analysis
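
Two of the objective measures listed above can be computed directly from simulator logs. The sketch below assumes simple log formats: reaction time is taken as the delay between a takeover request and the first driver input at or after it, and lane keeping is summarized by the standard deviation of lateral position (SDLP), a widely used lane-keeping metric. Which formulations the lab actually uses is not stated here, and the function names and input layouts are hypothetical.

```python
from statistics import pstdev
from typing import List, Optional, Tuple


def reaction_time(takeover_request_s: float,
                  inputs: List[Tuple[float, str]]) -> Optional[float]:
    """Seconds from the takeover request to the first driver input
    (e.g. "steer" or "brake") at or after it; None if there was no response."""
    for t, _kind in sorted(inputs):
        if t >= takeover_request_s:
            return t - takeover_request_s
    return None


def sdlp(lateral_offsets_m: List[float]) -> float:
    """Standard deviation of lateral position (offset from lane center, in m).
    Larger values indicate more weaving within the lane."""
    return pstdev(lateral_offsets_m)


inputs = [(9.0, "steer"), (11.2, "steer"), (12.0, "brake")]
print(round(reaction_time(10.0, inputs), 3))   # 1.2
print(round(sdlp([0.1, -0.1, 0.1, -0.1]), 3))  # 0.1
```

The steering input at 9.0 s precedes the takeover request and is therefore ignored.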

Computing

- Several servers with GPUs and CPUs

- Supports neural network training, large model training, digital twinning, and simulation tasks

- Over 30 high-performance GPUs, including NVIDIA A6000, A5000, and L40S units

Quadruped Robots

- Two Unitree Go2 quadruped robots

- Used for vision-language navigation and multi-robot cooperation research

Sensors

- A multi-modal sensor suite, including:

  - LiDAR: Ouster (128- and 64-channel) and RoboSense (128-channel) LiDARs, plus in-house software that reduces the channel count to simulate lower-cost sensors

  - Cameras: ZED 2i stereo cameras and 1080p RGB cameras

  - Radar: iSYS-5220 high-end traffic radar for reliable vehicle monitoring

  - GNSS/IMU: Garmin GNSS for sensor synchronization; NovAtel SPAN E1 and Gongji A802 for vehicle localization and state estimation

- Enable a range of autonomous driving and intelligent transportation tasks
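
The channel-reduction software mentioned above is not described in detail here. One simple way to emulate a lower-resolution sensor, shown below as a sketch, is to keep every k-th laser ring of a 128-channel scan, assuming each point carries its ring (channel) index, as many LiDAR drivers provide. Uniform decimation is only an approximation, since real low-cost sensors often have non-uniform vertical beam spacing. The `Point` type and `downsample_channels` function are hypothetical.

```python
from typing import List, NamedTuple


class Point(NamedTuple):
    x: float
    y: float
    z: float
    ring: int  # laser channel index, 0 .. n_channels - 1


def downsample_channels(points: List[Point], n_channels: int,
                        target_channels: int) -> List[Point]:
    """Emulate a sensor with fewer beams by keeping every k-th ring.
    Hypothetical helper; assumes evenly spaced rings are an acceptable proxy."""
    if target_channels <= 0 or n_channels % target_channels != 0:
        raise ValueError("target_channels must evenly divide n_channels")
    stride = n_channels // target_channels
    return [p for p in points if p.ring % stride == 0]


# One synthetic point per ring of a 128-channel scan
scan = [Point(10.0, 0.0, 0.01 * r, r) for r in range(128)]
reduced = downsample_channels(scan, n_channels=128, target_channels=32)
print(len(reduced), sorted({p.ring for p in reduced})[:4])  # 32 [0, 4, 8, 12]
```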

Software Tools & Datasets

OpenCDA: Cooperative Driving Automation Simulation Platform

OpenCDA is an open-source simulation tool for developing and testing Cooperative Driving Automation (CDA) features, integrating the CARLA (driving) and SUMO (traffic) simulators. It supports standard automated driving system (ADS) functions (perception, localization, planning, and control) while enabling simulation of cooperation among vehicles, infrastructure, and pedestrians. Developed in collaboration with the U.S. DOT and the FHWA CARMA Program, OpenCDA allows both component-level and system-wide evaluations and is designed to interface with CARMA XiL tools for advanced CDA research.

OpenCOOD: Open-Source Deep Learning Framework for Cooperative Driving

OpenCOOD is an Open COOperative Detection framework for autonomous driving. It provides state-of-the-art (SOTA) cooperative detection algorithms, convenient APIs for OPV2V, the large-scale simulated V2V perception dataset, and a set of useful tools for log replay. OpenCOOD supports multi-GPU training, provides an easy-to-use data API for several popular multi-agent perception datasets, and lets users work with different sensor modalities. It also provides multiple SOTA 3D detection backbones and supports SOTA multi-agent perception models for object detection and tracking in automated driving.
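
To make cooperative detection concrete: in late fusion, each connected agent runs its own detector and shares only its output boxes, which the ego vehicle pools and de-duplicates. The sketch below illustrates that idea with greedy non-maximum suppression (NMS) on axis-aligned bird's-eye-view boxes that are assumed to be already transformed into the ego frame. It is a simplified illustration, not OpenCOOD's code; practical pipelines typically work with rotated 3D boxes, and the function names here are hypothetical.

```python
from typing import List, Tuple

# Axis-aligned bird's-eye-view box: (x_min, y_min, x_max, y_max, score),
# assumed to be already expressed in the ego vehicle's coordinate frame.
Box = Tuple[float, float, float, float, float]


def iou(a: Box, b: Box) -> float:
    """Intersection-over-union of two axis-aligned boxes (scores ignored)."""
    ix = max(0.0, min(a[2], b[2]) - max(a[0], b[0]))
    iy = max(0.0, min(a[3], b[3]) - max(a[1], b[1]))
    inter = ix * iy
    union = ((a[2] - a[0]) * (a[3] - a[1])
             + (b[2] - b[0]) * (b[3] - b[1]) - inter)
    return inter / union if union > 0 else 0.0


def late_fusion(per_agent_boxes: List[List[Box]],
                iou_threshold: float = 0.5) -> List[Box]:
    """Pool every agent's detections, then apply greedy NMS by score."""
    pooled = sorted((b for boxes in per_agent_boxes for b in boxes),
                    key=lambda b: b[4], reverse=True)
    kept: List[Box] = []
    for box in pooled:
        # Keep a box only if it does not overlap an already-kept, higher-score box.
        if all(iou(box, k) < iou_threshold for k in kept):
            kept.append(box)
    return kept


ego_boxes = [(0.0, 0.0, 4.0, 2.0, 0.9)]
cav_boxes = [(0.2, 0.0, 4.2, 2.0, 0.8),    # same car, seen by the cooperator
             (10.0, 0.0, 14.0, 2.0, 0.7)]  # car occluded from the ego
print(late_fusion([ego_boxes, cav_boxes]))
# [(0.0, 0.0, 4.0, 2.0, 0.9), (10.0, 0.0, 14.0, 2.0, 0.7)]
```

The cooperator's duplicate of the shared car is suppressed, while the car that only the cooperator could see is added to the ego's detections.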

MobiVerse: Scaling Urban Mobility Simulation with Hybrid Lightweight Domain-Specific Generator and Large Language Models

MobiVerse is an advanced urban mobility simulation platform that integrates Large Language Models (LLMs) with transportation simulation to model realistic human mobility decisions at scale. The platform addresses critical limitations of existing mobility simulation approaches by providing a flexible framework that supports various activity and decision generation methods while incorporating LLM-powered behavioral adaptation. MobiVerse serves as a comprehensive research platform where users can integrate their own mobility modeling algorithms and test them with dynamic behavioral adaptation, enabling scalable city- and region-scale simulations of large numbers of agents that respond to environmental feedback in real time.

Vehicle-to-Vehicle Simulation Dataset: OPV2V

OPV2V is the first large-scale open dataset for vehicle-to-vehicle (V2V) perception, collected using the OpenCDA framework and the CARLA simulator. It includes 73 diverse scenes across 9 cities and 6 road types, featuring 12K LiDAR and RGB frames with 230K annotated 3D bounding boxes. The dataset supports multi-vehicle sensor fusion and provides benchmarks covering 4 LiDAR detectors and 4 fusion strategies (16 models in total), enabling research on challenging scenarios such as occlusion.
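
Multi-vehicle sensor fusion requires expressing every cooperating vehicle's data in the ego vehicle's coordinate frame. The sketch below shows that step in 2-D (bird's-eye view), assuming each vehicle's pose is given as (x, y, yaw) in a shared world frame. Full pipelines use 3-D poses and homogeneous transforms, and `to_ego_frame` is a hypothetical name rather than an OPV2V or OpenCDA API.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float]  # (x, y, yaw) in a shared world frame, yaw in radians


def to_ego_frame(point: Tuple[float, float], cav_pose: Pose,
                 ego_pose: Pose) -> Tuple[float, float]:
    """Map a 2-D point from a cooperating vehicle's frame into the ego frame."""
    px, py = point
    cx, cy, cyaw = cav_pose
    ex, ey, eyaw = ego_pose
    # Cooperator frame -> world frame: rotate by the cooperator's yaw, then translate.
    wx = cx + px * math.cos(cyaw) - py * math.sin(cyaw)
    wy = cy + px * math.sin(cyaw) + py * math.cos(cyaw)
    # World frame -> ego frame: translate, then rotate by minus the ego's yaw.
    dx, dy = wx - ex, wy - ey
    return (dx * math.cos(eyaw) + dy * math.sin(eyaw),
            -dx * math.sin(eyaw) + dy * math.cos(eyaw))


# Oncoming cooperator 10 m ahead of the ego sees an object 5 m in front of itself.
x, y = to_ego_frame((5.0, 0.0), cav_pose=(10.0, 0.0, math.pi), ego_pose=(0.0, 0.0, 0.0))
print(round(x, 6), round(y, 6))  # 5.0 0.0
```

The same transform applies to every point or box corner a cooperator shares, so errors in the shared poses translate directly into misaligned detections.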

Vehicle-to-Vehicle Real-World Dataset: V2V4Real

V2V4Real is the first large-scale real-world dataset for vehicle-to-vehicle (V2V) cooperative perception in autonomous driving. Collected using two vehicles simultaneously, it provides multi-view LiDAR and RGB data across 410 km of driving. The dataset includes 20K LiDAR frames, 40K images, and 240K annotated 3D bounding boxes covering 5 vehicle classes. It features various road types, includes HD maps, and supports tasks like 3D object detection, tracking, and Sim2Real adaptation with state-of-the-art benchmarks.


Vehicle-to-Everything Real-world Dataset: V2X-Real

V2X-Real is a large-scale dataset for vehicle-to-everything (V2X) cooperative perception, featuring multi-modal data from two connected vehicles and two smart infrastructure units equipped with LiDAR and multi-view cameras. It includes 33K LiDAR frames, 171K RGB images, and over 1.2M annotated 3D bounding boxes across 10 categories in complex urban scenarios. The dataset supports diverse cooperative driving tasks, such as 3D object detection, tracking, and trajectory prediction, and includes HD maps and benchmarks with state-of-the-art models. It can be split into four subsets: vehicle-centric, infrastructure-centric, V2V, and I2I.
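
The four subsets differ in which agent acts as the ego and which agents may share data with it. The sketch below encodes one reading of that split: in the vehicle-centric and infrastructure-centric subsets, a vehicle or an infrastructure unit is the ego and all other agents collaborate; in V2V and I2I, only agents of the same type take part. This is an illustrative assumption rather than the dataset's loader, and the agent names, `SUBSET_RULES`, and `collaborators` are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List, Set, Tuple


@dataclass(frozen=True)
class Agent:
    name: str
    kind: str  # "vehicle" or "infrastructure"


# subset -> (required ego kind, agent kinds allowed to collaborate)
# Assumed interpretation of the four V2X-Real subsets.
SUBSET_RULES: Dict[str, Tuple[str, Set[str]]] = {
    "vehicle-centric": ("vehicle", {"vehicle", "infrastructure"}),
    "infrastructure-centric": ("infrastructure", {"vehicle", "infrastructure"}),
    "V2V": ("vehicle", {"vehicle"}),
    "I2I": ("infrastructure", {"infrastructure"}),
}


def collaborators(agents: List[Agent], ego_name: str, subset: str) -> List[str]:
    """Names of agents whose data the ego may fuse under the given subset."""
    ego_kind, allowed = SUBSET_RULES[subset]
    ego = next(a for a in agents if a.name == ego_name)
    if ego.kind != ego_kind:
        raise ValueError(f"{subset} requires a {ego_kind} ego, got {ego.kind}")
    return [a.name for a in agents if a.name != ego_name and a.kind in allowed]


AGENTS = [Agent("veh1", "vehicle"), Agent("veh2", "vehicle"),
          Agent("infra1", "infrastructure"), Agent("infra2", "infrastructure")]
print(collaborators(AGENTS, "veh1", "V2V"))              # ['veh2']
print(collaborators(AGENTS, "veh1", "vehicle-centric"))  # ['veh2', 'infra1', 'infra2']
```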